Electroencephalography (EEG) is a remarkable tool. By simply placing electrodes on the scalp, we can listen in on the brain’s electrical symphony, gaining important insights into everything from sleep patterns and epilepsy, to how our brains process information.

But there’s a major challenge: the brain’s signals are incredibly faint, on the order of a millionth of a volt. Analysing an EEG recording is like trying to hear music through your headphones with loud noise in the background. Eye blinks, muscle tension, and environmental electrical noise can easily drown out the neural activity we want to study.

For decades, scientists have grappled with this “artifact” problem. Cleaning EEG data often requires painstaking manual work by experts or computationally heavy algorithms that come with their own limitations. This has been a significant bottleneck, preventing EEG technology from reaching its full potential, especially in real-world applications like brain-computer interfaces (BCIs) and mobile health monitoring.

Recent work led by researchers at the Center for Biomedical Imaging (CIBM), University of Geneva and collaborating institutions introduces a powerful new tool that promises to change the game: the Generalized Eigenvalue De-Artifacting Instrument (GEDAI).

A Smarter Way to Denoise: Leadfield Filtering

Traditional methods often try to identify artifacts based on their statistical properties. For example, some methods look for signals that are too large or don’t follow a typical distribution. The problem is, they operate “blindly,” without any fundamental knowledge of how a brain signal is generated.

GEDAI takes a completely different, “theoretically informed” approach called leadfield filtering. Imagine you have a perfect blueprint of the brain that describes how any electrical activity generated inside the brain should project onto the scalp electrodes. This blueprint is known as the “forward model” or “leadfield”. GEDAI uses the forward model to define the unique fingerprint characteristic of signals that spatially originate in the brain, allowing it to filter out any non-matching activity.

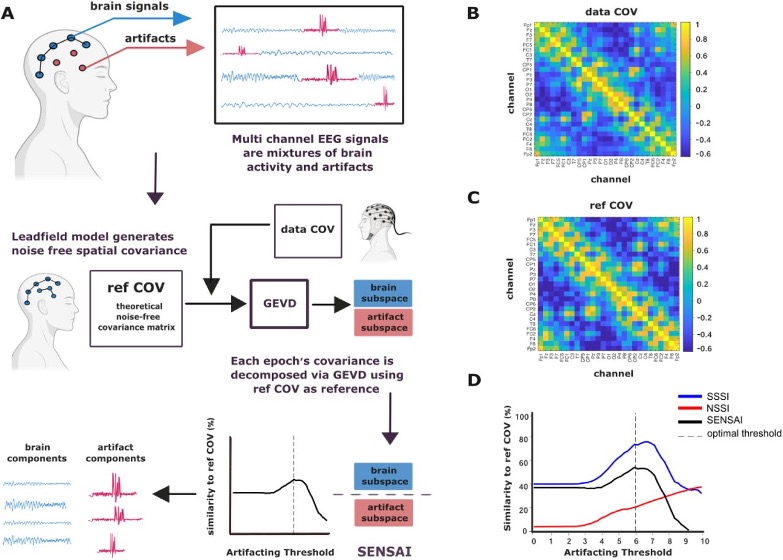

- Get the Blueprint: GEDAI starts with a pre-computed leadfield model that represents the spatial signature of true brain activity. This becomes its theoretical “clean” reference covariance matrix (refCOV, Fig 1C).

- Compare and Decompose: It then looks at a small chunk of the recorded, noisy EEG data (dataCOV, Fig 1B) and uses a powerful mathematical technique called Generalized Eigenvalue Decomposition (GEVD) to compare the two. GEVD separates the signals into components, ranking them by how much they deviate from the clean blueprint. Signals that don’t match the brain blueprint are flagged as likely noise/artifacts. The most deviant components (i.e. those with the largest eigenvalues) are pushed to the top of the list, much like oil floats on top of water.

- Find the Perfect Cutoff: But how do you decide where to draw the line between brain signal and noise? GEDAI introduces a second innovation called the Signal & Noise Subspace Alignment Index (SENSAI, Fig 1D). SENSAI automatically tests different thresholds for removing noisy components and finds the sweet spot that (1) maximizes the similarity between the denoised (clean) data and the theoretical blueprint, while (2) minimizing the similarity between the discarded (noisy) data and the theoretical blueprint.

- Reconstruct the Signal: Finally, GEDAI reconstructs the EEG signal using only the components that SENSAI identified as consistent with genuine brain activity.
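The decompose-and-reconstruct steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `gevd_filter`, the `reg` diagonal loading, and the hand-picked `n_remove` are all hypothetical. In GEDAI the cutoff is chosen automatically by SENSAI, which is not reproduced here.

```python
import numpy as np
from scipy.linalg import eigh


def gevd_filter(data, ref_cov, n_remove, reg=1e-6):
    """Remove the n_remove components that deviate most from ref_cov.

    data:     (channels, samples) chunk of EEG
    ref_cov:  (channels, channels) reference covariance, e.g. leadfield @ leadfield.T
    n_remove: number of top components to discard (picked by hand in this sketch)
    reg:      small diagonal loading so ref_cov is positive definite (an assumption)
    """
    n_ch = data.shape[0]
    data_cov = np.cov(data)  # rows are channels
    ref = ref_cov + reg * np.trace(ref_cov) / n_ch * np.eye(n_ch)

    # Solve data_cov @ w = eigenvalue * ref @ w; eigh returns ascending eigenvalues.
    evals, W = eigh(data_cov, ref)
    order = np.argsort(evals)[::-1]  # most deviant components first
    evals, W = evals[order], W[:, order]

    # eigh normalises W so that W.T @ ref @ W = I, hence inv(W.T) = ref @ W.
    patterns = ref @ W
    keep = np.arange(n_ch) >= n_remove
    cleaned = patterns[:, keep] @ (W[:, keep].T @ data)
    return cleaned, evals
```

With `n_remove=0` the function returns the input unchanged, which is a quick sanity check that the decomposition and back-projection are consistent.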

Putting GEDAI to the Test

The CIBM research team put GEDAI through a rigorous series of tests, comparing it against three state-of-the-art automated denoising methods: a fast method based on Principal Component Analysis (PCA) and two popular methods based on Independent Component Analysis (ICA). They created thousands of simulated datasets, using both synthetic and real “clean” EEG recordings, and then added controlled amounts of realistic artifacts like eye movements (EOG), muscle tension (EMG), and bad channels (NOISE). These tests were designed to push the algorithms to their limits by varying:

- Signal-to-Noise Ratio (SNR): From very noisy (-9 dB) to less noisy (0 dB).

- Temporal Contamination: From mildly corrupted (25% of the data) to completely saturated with artifacts (100%).

- Artifact Type: EOG, EMG, NOISE, and—most realistically—a complex mixture of all three.
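To make the SNR and contamination settings concrete, here is a small sketch of how an artifact could be mixed into clean data at a target SNR. The function `mix_at_snr` is hypothetical, and two choices in it are assumptions rather than the study's procedure: the SNR is computed only over the contaminated samples, and those samples are taken from the start of the recording.

```python
import numpy as np


def mix_at_snr(clean, artifact, snr_db, fraction=1.0):
    """Add `artifact` to `clean` so the contaminated part has the given SNR.

    clean, artifact: arrays of shape (channels, samples)
    snr_db:          target signal-to-noise ratio in decibels (e.g. -9 or 0)
    fraction:        share of samples that receive the artifact (0 < fraction <= 1)
    """
    n = clean.shape[-1]
    n_bad = int(round(fraction * n))

    # Power of signal and artifact inside the contaminated window.
    p_clean = np.mean(clean[..., :n_bad] ** 2)
    p_art = np.mean(artifact[..., :n_bad] ** 2)

    # SNR_dB = 10 * log10(p_clean / (scale**2 * p_art)), solved for scale.
    scale = np.sqrt(p_clean / (p_art * 10 ** (snr_db / 10)))

    mask = np.zeros(n)
    mask[:n_bad] = 1.0  # artifact is present only in the first n_bad samples
    return clean + scale * artifact * mask
```

At -9 dB the artifact carries roughly eight times the power of the brain signal, which is why that condition is described as very noisy.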

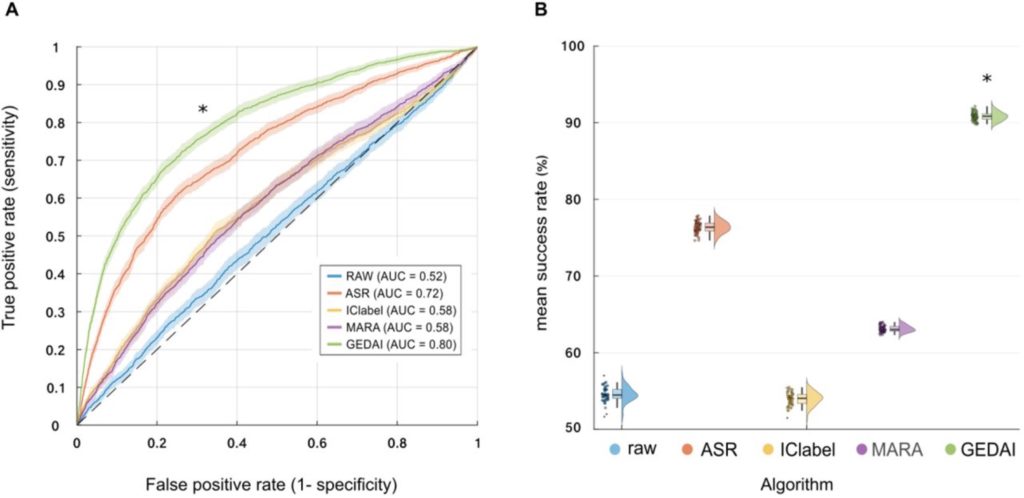

- ERP Classification: They used a dataset where participants viewed either a common (white square) or a rare (Einstein’s face) image. The goal was to see if an algorithm could predict which image a person saw based on a single trial of their EEG data. After cleaning the data with GEDAI, the classification accuracy soared to an Area Under the Curve (AUC) of 0.80, significantly outperforming the next-best algorithm (0.72).

- Brain Fingerprinting: In this intriguing task, the goal is to identify an individual from a group of 100 people based solely on their unique resting-state EEG patterns. Again, GEDAI was the clear winner, achieving a 91% success rate in identifying subjects, compared to 76% for the next-best algorithm.
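For readers wondering how a fingerprinting success rate can be scored, the sketch below shows one common generic approach: correlate each subject's feature vector from one recording with every subject's vector from a second recording, and count how often the best match is the same person. This is an assumption for illustration only. The function `identification_rate` and the two-session setup are hypothetical and may differ from the procedure used in the study.

```python
import numpy as np


def identification_rate(session_a, session_b):
    """Share of subjects whose best match across sessions is themselves.

    session_a, session_b: float arrays of shape (subjects, features),
    with row i describing the same subject in both sessions.
    """
    # Centre and normalise each row so the dot product is a Pearson correlation.
    a = session_a - session_a.mean(axis=1, keepdims=True)
    b = session_b - session_b.mean(axis=1, keepdims=True)
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)

    corr = a @ b.T                   # (subjects, subjects) similarity matrix
    predicted = corr.argmax(axis=1)  # best match in session B for each row of A
    return float(np.mean(predicted == np.arange(len(a))))
```

Under this scoring, cleaner data should make each person's two recordings more alike than any pair from different people, which raises the identification rate.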

The Future is Clean

GEDAI represents a significant step forward for EEG-based neuroimaging and its applications. By leveraging a theoretical model of the brain, it provides a fast, robust, and fully automated way to denoise heavily contaminated data without needing “clean” reference segments or expert supervision. Its success in challenging conditions makes it a promising tool for mobile EEG, dry electrodes, and clinical settings where data quality is often compromised. The authors suggest its principles could be extended to other brain imaging techniques like magnetoencephalography (MEG). In the spirit of open science, GEDAI has been made available as an open-source EEGLAB plugin, allowing the research community to both test and benefit from this powerful new tool.

Read the full preprint:

Ros, T., Férat, V., Huang, Y., et al. (2025). Return of the GEDAI: Unsupervised EEG Denoising based on Leadfield Filtering. bioRxiv. https://doi.org/10.1101/2025.10.04.68044938

See the videos:

https://youtu.be/a4bb2wF_AEk?si=s60LrxzoqizkmA_4

Get the code:

GEDAI is available as an open-source EEGLAB plugin at https://github.com/neurotuning/GEDAI-master.